(Warning: I've had some coffee today)

In The Beginning

In 1990, at the age of 26, I worked as a drafter for a small naval engineering firm in Virginia. It was my third job in the field of naval design, and I was assigned to work in the Piping Systems Division with maybe two dozen others, in support of contracts for overhauling U.S. warships. It was during this time that PC-based CAD made its foray into the defense industry. ThisCAD and ThatCAD were everywhere, but AutoCAD was the eventual and clear winner. Until then, nothing with "CAD" in the name was even considered unless it ran on UNIX-powered hardware.

While learning to use this new "AutoCAD" tool, I tripped over something and looked down to realize that inside this little product was a shiny gem called "AutoLISP": a customization programming tool, built right into the product! I had tinkered with CMD and batch scripting for MS-DOS and Windows, but from that moment on I was addicted to programming, mainly because it made it possible to draw and "create" visible objects on the screen, rather than a bunch of numbers and text.

After a few weeks, I had built some menus and functions (or "routines", as they were often called then), and eventually wrapped them in dialog forms and prettier stuff. After I shared them with my coworkers, the feedback started coming in, and ideas began coalescing like a tropical storm into a hurricane. The momentum kept building, and within a few months I had a complete "design package" for automating many of the tasks involved in creating and validating engineering and design drawings for piping systems.

Auto-Something-or-other

Not long after that threshold was crossed, I spread out into HVAC systems, and eventually into the other primary system groups involved in naval engineering: Structure, Outfitting, Machinery, Electrical and Electronics. Then it was on to building the top of this strange pyramid: Notes, References, Sheet Formats, Materials Lists, Tables, and so on. In much the same way a Lego kit ends up becoming a city with elevated monorails and skyscrapers around a kid's room, I ended up gluing in data files, database tables and views, symbol tables, icon files, and drawing parts (block inserts, XREFs, etc.). Building on top of what a predecessor from our New York office had started, it became an entirely new animal.

My boss was supportive, as was the Department Manager, and the Division Manager. But once it cleared the cloud layer, things got less clear. I never asked for a raise or a promotion, oddly enough. All I asked for was the approval to tap a few key "power users" in each department to form a "team" to help improve this automation tool even further and faster. Silence.

In 1996, I was contacted by a much larger company, a nearby shipyard, to take on a newly created role of "AutoCAD Systems Manager" for an entire Division. That meant a lot of things at once for me: automating the deployment (installation), configuration, maintenance and licensing of AutoCAD and AutoCAD Mechanical Desktop for roughly 1,300 users. There were other Divisions, but they were tied to UNIX products and rebuffed any consideration of anything that ran on a scruffy PC. This offer also meant I'd take over licensing administration (i.e., FLEXlm), and my prized role: Customization. Oh yeah, it also meant a considerable pay increase and better benefits, but customization was what I had my eyes on the entire time.

Project: Mariner

Within a few months of starting that new job, I began building an entirely new, suite-based collection of design automation tools to run on AutoCAD and MDT for Piping, HVAC, Mechanical, Hull-Structure, Hull-Outfitting, Electrical, and Materials. This new beast grew a beard and a deeper voice and was eventually named "Mariner". A fitting name, I thought. I sure get wrapped around the axle when it comes to choosing a name for software projects, but that's for another story.

This process continued to grow and I was allowed to form an unofficial "team" to help maintain and improve it as well. Once again, I never asked for a raise or promotion, but things seemed to progress much more easily.

Sometime in late 1999, the shipyard began bringing in contract designers from local firms to handle the volume of work going on. The contractors were required to learn this new abstraction layer, so I developed a training guide and a training course, and was even authorized to issue certificates of completion. Seriously, they printed some 1,400 books with color graphics and sturdy permanent binder edges. Nifty stuff!

Project: ShipWorks

In early 2000, one of the contractors asked if they could license this "Mariner" product to use back in their offices. The rationale at the time was a lack of physical space at the shipyard to bring in any more contractors, while the workload continued to rise. I approached the corporate overlords, their legal masters and the contracts department gurus, and soon a "first-ever" licensing contract was produced to allow their "partners" to use this product. Until then, no such vehicle had existed, or so I was told. Then I inquired about approaching Autodesk or some other (now defunct) software vendor to help take it to the next logical level: external marketing. There was interest from nearly all of the outside contracting firms, as well as several software vendors. The company said: "NO. We are not a software development company."

Growing tired of the lack of management support, I accepted an offer to work for a local Autodesk product reseller. I submitted my two weeks' notice and packed my belongings to move on to yet another employer. On my last Friday, I received a phone call from the contracting firm that had initially approached our company about licensing Mariner. They had heard I was leaving and made a counteroffer, and, stunned and shocked, I accepted it. I went to that new employer and, again, started development on a totally new product, incorporating all of the lessons learned from the Mariner project. This animal grew into something called "ShipWorks". It was never intended to run on SolidWorks, nor was that ever attempted. Still, Autodesk was obviously not too happy about the "Works" suffix. Just an odd side note now, I think.

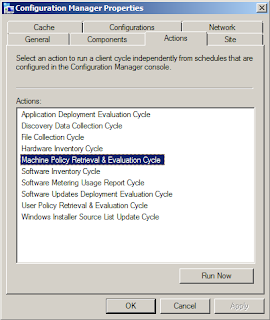

That leg of my journey into the software technology world is where I officially transitioned from a mostly-engineering environment into a mostly-IT environment. I took on managing Windows Server, SMS and Configuration Manager, WSUS, RIS and WDS, and a whole bunch of other weird things that I found interesting and helpful, and which helped cut costs and make for a better computing environment.

In this new role, I was given a team, management support and resources, and things finally seemed to be on a good track. Then in 2007, the company was sold and split apart. I ended up bouncing to a consulting firm, which lasted about three months before the economy tanked. I had to make ends meet doing side work for a few months before crawling back to the shipyard on my knees to beg for my job back. They graciously accepted.

From then on, I haven't touched AutoCAD much at all. Most of the work I've done since has involved things like ASP or PHP, along with SQL Server, Oracle, Active Directory, SMS or Configuration Manager, inventory systems, service request systems, and so on. Basically, gluing things together horizontally with a big bucket of sticky web application goo.

Looking Back

At every place I've worked, I've built something custom to help the business operate more efficiently, and I've tried to make the users happy with the results. In every case, my immediate supervisors were very supportive. In every case, once it went above my immediate supervisors, things got shaky and less reaffirming. The support and reinforcement began to vaporize the higher I went.

The Takeaway

Over the past twenty-odd years, I've seen too much potential wasted because someone decided a project was not worthy of basic consideration. Not even giving it a second thought. The results could have been astoundingly helpful for a lot of people and businesses.

Being too quick to judge was always the culprit, killing the dream before it could begin to take shape. I'm writing this today because I still see this happen too often, in too many places.

If you have a lone developer, or a small team of developers, within your business, official or not, and they are actually producing useful things,

support them. Especially if they don't ask for monetary compensation, but simply want to see that management cares and wants to help them push it further. Maybe it's outside of your "core business" comfort zone. Maybe you never considered your business to include this mysterious thing called "applications development". Try making it work anyway. You say you have "gut instincts" for business? Well, use them. You might be amazed at what good can come from it. I'm not suggesting you rubber-stamp every app-dev project without checking its merits. Verify and validate them all. Just don't reject them simply because they involve "application development". That's an unforgivable crime of business.

Cheers.

Case in point: