Intro: I just took the wraps off this particular "feature" within a web application project I've been working on for some time now, so I figured it was a good time to share some thoughts about why I spent the time and effort to make it work. I'm not going to say it's 100% complete yet, and I still have some features to fill out, but it's walking on two legs and says "Daddy!" so I'm kind of proud of it. I actually submitted this to another blog site, but it was rejected as not being within the topic set they prefer, so I'm posting it here.

A Little Background

Anyone who grew up watching the original Star Trek series on TV should recall a particularly famous line from Spock: "Crude, but effective". The implication was that a "solution" doesn't always have to be elegant or optimal in order to be sufficient. Hence the name of this article, and of the mini-project I'm about to describe (and bore you to death with). So, let's get started!

One of the most widely-used tools in the world of Microsoft enterprise systems management is System Center Configuration Manager. One of the most widely-used tools to extend the functionality of Configuration Manager is (or are) the "SCCM Right-Click Tools", developed and supported by Rick Houchins (link).

The tool-set installs a set of scripts, and some XML extensions to the MMC console snap-in for Configuration Manager. The result is an additional set of pop-out menus when you right-click on resources in the MMC console. They are grouped into "Tools", "Actions", "Log Files" and so on, each having a set of links to perform useful tasks on a single resource (computer) or on all of the resources in a selected Collection.

Some of the features it provides include:

● Invoke ConfigMgr Agent actions such as:
  ○ Hardware (and Software) Inventory
  ○ Machine (and User) Policy Retrieval and Evaluation
  ○ Discovery Data Collection Cycle
  ○ More
● Run Client Tools such as:
  ○ Restart ConfigMgr Agent service
  ○ Uninstall/Re-install ConfigMgr Client
  ○ Re-Run Advertisements
● View Client Log Files
● View Reports for selected Clients or Collections

There are quite a few versions of this out in the wild, and I've rarely seen or heard of two IT shops using the same (or even the latest) version. Regardless, Rick's product has become so popular and widely known that it's hard to find a ConfigMgr Administrator anywhere in the world who hasn't heard of it, let alone one who doesn't use it every day. It's even inspired projects such as Client Tools (link) and SCCM Client Actions Tool (link). Some have taken off, while others have not. Ultimately, it's a good thing to inspire others to try good things for the good of others, is it not?

One of the larger projects I've been working on for the past year is a web-based tool for integrating and managing multiple enterprise "islands" of information to achieve a holistic management tool. It involves Configuration Manager, Active Directory, legacy inventory management systems, and multiple databases, and rolls all of that into a Role-Based Access Control interface that maps the features to the discrete functional groups within the IT department, as well as specific features made available to end users.

Some of you might wonder if this has anything to do with my old "Windows Web Admin" project that I killed a while ago. The answer to that is "yes". WWA formed the basis of this project, but if WWA was 1.0, this project is approximately 5.0. There's a lot of change and scaling out in this one, but its genesis was WWA. Okay, enough of that. Let's move on...

One of the most daunting challenges that I've been trying to solve is how to incorporate my own set of "client tools" into the web interface. Why is this so difficult? Primarily, the biggest concern is security risk and exposure. There are quite a few potential ways to approach this, but let's break it down in the most basic terms:

The Goal

The goal of this particular subset of the project is to be able to directly invoke processes on remote computers over a network connection, and initiate this from within a web browser. Some aspects of the Right-Click tools are easy to implement via a web interface, such as exploring the C: drive, opening the remote log or cache folder, and pinging for connectivity testing. But the features which require invoking a WMI or WBEM/SWbem interface remotely are a little more complicated to achieve from within a local web browser session. At least they are for my limited set of abilities.

In the simplest terms, WBEM, or Web-Based Enterprise Management, is the industry standard behind WMI (Windows Management Instrumentation), which is Microsoft's implementation of it. For our purposes, WBEM/WMI is the mechanism by which you connect to, and interact with, the ConfigMgr client on a remote computer. It's also how you connect to, and interact with, the site server, but that's for another article.

WMI and WBEM can be a little complicated to describe, and a full description isn't necessary for this article. But you do need at least a basic understanding of "what WBEM is", so that you can appreciate what's going on under the hood when you turn the key and start this beast up.

The good news is that you don't have to roll up your sleeves and get dirty with programming code in order to leverage WBEM's benefits. There are packaged utilities that can do the messy work for you, such as the SendSchedule.exe utility included with the Microsoft ConfigMgr Toolkit v2.

There are probably more potential "options" for solving this dilemma, but I've boiled it down to three:

Option 1 - Client-Side Code

It could be done with some JavaScript code with JSON or jQuery, or whatever, running as a client-side process (on the computer where the browser is active). This makes it possible to run in the context of the logged-on user.

The problem with the client-side script option is security context and "sand-boxing" with respect to invoking other local scripts, or an executable, under the logged-on user context. There's also the challenge of maintaining centralized access control and logging. The security model in this scenario relies on individual user accounts having permissions to invoke remote interfaces like the ConfigMgr Client Agent service. This isn't a bad thing, but it does depend on diligent administration of an AD security group.

Option 2 - Server-Side Code

It could be done with server-side code, but that would involve forked or marshaled processes running under the context of a proxy account. Or it could be run in the context of the IIS application pool, or even the IIS web site.

The biggest problem with the server-side code approach is the use of a proxy user account, and controlling access to the folders and files in which the user context can execute. The security model in this scenario is a single "proxy" user account, with permissions granted to allow it to invoke remote interfaces on client computers.

Option 3 - A Real Developer

It could also be done with custom programming using .NET or Java and a compiled executable or even a browser add-in.

The security model in this scenario could be either of those described in the first two options above, or a hybrid of both.

However, the less obvious "problem" with this approach comes down to complexity, time and resources. Very often the fourth issue is budget.

In our case, we don’t have this as a viable option at our disposal. What we do have at our disposal is....

me.

That's right. Simple. Basic. Me. My skill set is not the most robust on Earth (big shock, I know), but it does contain enough database and coding skills, plus a fetish for application design, to be dangerous. And if you (ok, I) add a pinch of stupidity, sarcasm and bad humor, and a teaspoon of caffeine to the mix, you have a concoction that gets it done. So this led me to option 4...

Option 4 - Duct Tape, Chewing Gum, and Baling Wire

The old MacGyver approach. This is actually a very old method, but it's a tried-and-true one that has stood the test of time and many, many projects. It's the old "web-database-scheduler" approach. Let me elaborate...

In the most basic terms possible:

There's a web interface for submitting the requested "action" to be performed on a remote client. This captures the basic information: the client (or collection) name, and the action to be performed. Before you start flapping away about which language is "best" for this role, I'll just gently close your lips with my greasy fingers, encased in old welding gloves, and whisper: "shhhhhhhh... it doesn't really matter." It's true. You could crank this out using PHP, ASP, ASP.NET, Ruby, Python, Mython, Yourthon, Therethon or Whateverthon. As long as it can display a web form in a browser session, collect the input, and interact with a database to store the information, you're good to go.

Next, there's a database table for storing the requests submitted from the web form. This includes the client name, the action to be performed, who requested it and when (date and time), and task-related things like "is-completed" and when, along with other optional pieces of information.
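To make that concrete, here is a minimal sketch of such a request table. It is not the schema used in the project described here (that one lives in SQL Server); it uses Python's built-in sqlite3 module purely so it can run anywhere. The table name `client_tool_requests`, the column names, and the helpers `open_queue` and `submit_request` are all hypothetical, chosen only for illustration.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema: one row per submitted request.
SCHEMA = """
CREATE TABLE IF NOT EXISTS client_tool_requests (
    request_id    INTEGER PRIMARY KEY AUTOINCREMENT,
    client_name   TEXT NOT NULL,              -- computer (or collection) name
    action        TEXT NOT NULL,              -- requested client action
    requested_by  TEXT NOT NULL,              -- who submitted the request
    requested_at  TEXT NOT NULL,              -- when it was submitted (UTC)
    is_completed  INTEGER NOT NULL DEFAULT 0, -- 0 = pending, 1 = processed
    completed_at  TEXT,                       -- when it was processed
    result        TEXT                        -- optional outcome note
)
"""


def open_queue(path=":memory:"):
    """Open (or create) the request queue database."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def submit_request(conn, client_name, action, requested_by):
    """Store one request, as the web form would do on 'Submit'."""
    now = datetime.now(timezone.utc).isoformat(timespec="seconds")
    cur = conn.execute(
        "INSERT INTO client_tool_requests "
        "(client_name, action, requested_by, requested_at) "
        "VALUES (?, ?, ?, ?)",
        (client_name, action, requested_by, now),
    )
    conn.commit()
    return cur.lastrowid
```

A web form handler would call something like `submit_request(conn, "PC-001", "Hardware Inventory", "jdoe")` and nothing more; the row simply sits there as pending until something else picks it up.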

Then there's a scheduled task, which reads the database table on a frequent and recurring schedule, fetches only those rows which have not already been processed (completed), and executes the requested action on the specified remote computer. After each task is completed, the corresponding row in the database table is updated to indicate it was completed, and time-stamped. This is what effectively prevents the entire process from melting down by re-running every row every time.
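The "only fetch what hasn't been processed" part is the piece that keeps things sane, so here is a small sketch of just that idea. Again, this is an illustration under assumptions: it uses Python's sqlite3 with a throwaway in-memory table, and the names `requests`, `make_demo_queue`, `fetch_pending` and `mark_completed` are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone


def make_demo_queue():
    """Build a throwaway in-memory queue with two pending rows (demo data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE requests ("
        " id INTEGER PRIMARY KEY,"
        " client_name TEXT NOT NULL,"
        " action TEXT NOT NULL,"
        " is_completed INTEGER NOT NULL DEFAULT 0,"
        " completed_at TEXT)"
    )
    conn.executemany(
        "INSERT INTO requests (client_name, action) VALUES (?, ?)",
        [("PC-001", "Hardware Inventory"),
         ("PC-002", "Machine Policy Retrieval")],
    )
    conn.commit()
    return conn


def fetch_pending(conn):
    """Return only the rows that have not been processed yet."""
    return conn.execute(
        "SELECT id, client_name, action FROM requests "
        "WHERE is_completed = 0 ORDER BY id"
    ).fetchall()


def mark_completed(conn, request_id):
    """Flag a row as done and time-stamp it, so later cycles skip it."""
    now = datetime.now(timezone.utc).isoformat(timespec="seconds")
    conn.execute(
        "UPDATE requests SET is_completed = 1, completed_at = ? "
        "WHERE id = ? AND is_completed = 0",
        (now, request_id),
    )
    conn.commit()
```

Once `mark_completed` has run for a row, the next call to `fetch_pending` no longer returns it, which is the whole trick.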

So, putting this all together, you get a process that works like this:

1. An authorized user of the web site opens a web page for a particular computer or Collection of computers, and clicks a button/link for "Client Tools". This opens a web form with a list of available "actions" to perform on the computer(s) remotely. The user selects the desired action and clicks "Submit". The information is then entered into a database table. In my case, I'm using SQL Server 2008 R2. But you could use Oracle, MySQL, Sybase, Informix, DB2, or just about anything that's "robust" enough to support a business environment with multiple users.

2. The scheduled task, running under the context of a proxy account with permissions to invoke client agent actions remotely, executes a script on the next cycle. The script reads all rows which are not yet marked as completed. Iterating through the set of rows, it reads the name of the computer to be acted upon, and the requested "action" to invoke. The script checks for connectivity to the remote computer, and then executes the remote action using either the SWbem interface (via COM or .NET), or in the case of my lazy-ass approach: executes the SendSchedule.exe utility (included with the ConfigMgr Toolkit v2 download). After running the task, it updates the row to set the "completed" field and enters a time-stamp to indicate when it was processed.

3. The remote client receives the request from the remote script execution, under the user context of the scheduled task/job that launched it. It then verifies authentication and, if allowed, invokes the client action or other (possibly) custom task.
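Step 2 is where most of the moving parts are, so here is a rough sketch of one scheduler cycle. It is only an illustration, written in Python with sqlite3 rather than the tools used in the project. The connectivity check and the remote action are passed in as plain functions (`is_reachable` and `run_action`, both hypothetical names), so nothing here assumes how SendSchedule.exe or a WMI call is actually invoked. Recording an unreachable computer as "completed" with a result note is also an assumption made for this sketch; you might prefer to leave such rows pending and retry.

```python
import sqlite3
from datetime import datetime, timezone


def make_demo_queue():
    """Build a throwaway in-memory queue with two pending rows (demo data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE requests ("
        " id INTEGER PRIMARY KEY,"
        " client_name TEXT NOT NULL,"
        " action TEXT NOT NULL,"
        " is_completed INTEGER NOT NULL DEFAULT 0,"
        " completed_at TEXT,"
        " result TEXT)"
    )
    conn.executemany(
        "INSERT INTO requests (client_name, action) VALUES (?, ?)",
        [("PC-001", "Hardware Inventory"),
         ("PC-002", "Hardware Inventory")],
    )
    conn.commit()
    return conn


def process_cycle(conn, is_reachable, run_action):
    """Run one scheduler cycle over all pending rows.

    is_reachable(client_name) -> bool   (e.g. a ping wrapper)
    run_action(client_name, action)     (e.g. a wrapper around the real tool)
    Returns a dict of {request id: result text}.
    """
    pending = conn.execute(
        "SELECT id, client_name, action FROM requests "
        "WHERE is_completed = 0 ORDER BY id"
    ).fetchall()

    outcomes = {}
    for request_id, client_name, action in pending:
        if not is_reachable(client_name):
            result = "unreachable"
        else:
            try:
                run_action(client_name, action)
                result = "ok"
            except Exception as exc:  # record the failure, keep going
                result = f"failed: {exc}"

        # Mark the row as processed and time-stamp it.
        now = datetime.now(timezone.utc).isoformat(timespec="seconds")
        conn.execute(
            "UPDATE requests SET is_completed = 1, completed_at = ?, "
            "result = ? WHERE id = ?",
            (now, result, request_id),
        )
        outcomes[request_id] = result

    conn.commit()
    return outcomes
```

Passing the two functions in keeps the loop testable with fakes, and it means swapping SendSchedule.exe for a direct WMI call later would only touch the `run_action` wrapper, not the queue logic.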

Clunky? Yep. Complicated? Not really (I've seen things MUCH more complicated doing much less). Could it be done more simply or more elegantly? You betcha!

Some Advantages

So, what additional benefits does this approach provide? For starters, since the action is really based on a SQL database repository, and a job scheduler, I have a centralized model. That means I have the means to log everything going on. Now, instead of every console-user running a local task, with log files on their computer and the remote computers, everything is in one place, where it's easy to sort and manage and get useful reports out. It's also easy to apply a security model to restrict access in one place at one time. I'm not going to say web applications are a panacea, but they do offer some very attractive capabilities.

Here are a few screenshots of it. The first image is the Resource details view, which shows the general "Computer System" properties. The "Client Tools" button is at the upper-right corner.

After clicking the "Client Tools" button, the pop-up form is shown (below). Right now, I only have three of the Client Actions exposed, not because there's a problem with them, but because I'm working on role-based filtering of features. The user session for this example doesn't have access to the other actions.

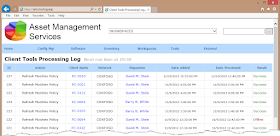
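The role-based filtering mentioned above could be sketched roughly like this. It is a guess at the general shape, not the project's actual implementation: the role names (`helpdesk`, `sccm_admin`), the `ROLE_ACTIONS` mapping, and both helper functions are hypothetical. The action names are taken from the feature list earlier in the article.

```python
# Every action the form knows about, in display order.
ALL_ACTIONS = [
    "Hardware Inventory",
    "Machine Policy Retrieval and Evaluation",
    "Discovery Data Collection Cycle",
    "Restart ConfigMgr Agent service",
    "Uninstall/Re-install ConfigMgr Client",
]

# Hypothetical mapping of roles to the actions they may request.
ROLE_ACTIONS = {
    "helpdesk": {
        "Hardware Inventory",
        "Machine Policy Retrieval and Evaluation",
        "Discovery Data Collection Cycle",
    },
    "sccm_admin": set(ALL_ACTIONS),
}


def allowed_actions(user_roles):
    """Return the actions to show on the form for a user's roles."""
    permitted = set()
    for role in user_roles:
        permitted |= ROLE_ACTIONS.get(role, set())
    # Preserve display order and drop anything not permitted.
    return [action for action in ALL_ACTIONS if action in permitted]


def can_submit(user_roles, action):
    """Re-check on submit, so a hand-crafted request can't skip the filter."""
    return action in allowed_actions(user_roles)
```

The same check is used twice on purpose: once to decide what the form displays, and again when the request is submitted, since hiding a button in the browser is not a security control by itself.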

The image below is the log report, which captures every submitted request and shows when it was processed and the result.

Conclusion Contusion

Could this all have been done a different/better way? I'm sure it could have, but I'm working against two huge constraints: time and skill set. Time is very limited and my skill set is still mostly ASP/SQL. I've done a lot with PHP also, but in this environment it didn't make sense to shoehorn it in. I worked with ASP.NET for a brief period, but that was a while ago and I haven't had the opportunity to brush up on the newer technologies. I know: excuses, excuses. Feh.

The third constraint is budget. Budgets are awesome. If only we had one. For now, duct tape and chewing gum will do just fine.
